Note: This is to the specification only.

Lexical Analysis

Lexer → Lexemes → Tokens → Token Stream → Symbol Table

Lexemes

Lexemes are an intermediate stage between the source and tokens. Each lexeme is effectively a segment of source text waiting to be tokenised.
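As a minimal sketch of the lexer stage, assuming a tiny hypothetical language with identifiers, integer literals and single-character symbols, the source can first be cut into lexemes (raw strings) and each lexeme then classified into a token (a type/value pair). The pattern, the keyword set and the token type names are illustrative assumptions, not part of any specification.

```python
import re
from typing import List, Tuple

# Hypothetical lexeme patterns: identifiers, integer literals, or single-character symbols.
LEXEME_PATTERN = re.compile(r"[A-Za-z_]\w*|\d+|[+\-*/=()]")
KEYWORDS = {"print"}  # illustrative keyword set, not from any specification

def split_lexemes(source: str) -> List[str]:
    """Stage 1: cut the source into lexemes. Text matching no pattern, such as
    whitespace, is skipped; a real lexer would report invalid characters."""
    return LEXEME_PATTERN.findall(source)

def tokenise(lexeme: str) -> Tuple[str, str]:
    """Stage 2: classify one lexeme as a (token type, value) pair."""
    if lexeme in KEYWORDS:
        return ("KEYWORD", lexeme)
    if lexeme.isdigit():
        return ("INTEGER", lexeme)
    if lexeme[0].isalpha() or lexeme[0] == "_":
        return ("IDENTIFIER", lexeme)
    return ("SYMBOL", lexeme)

def token_stream(source: str) -> List[Tuple[str, str]]:
    """Stage 3: the ordered list of tokens handed on to later stages."""
    return [tokenise(lexeme) for lexeme in split_lexemes(source)]

print(split_lexemes("total = count * 7"))  # ['total', '=', 'count', '*', '7']
print(token_stream("total = count * 7"))
```

Keeping the split and the classification as separate functions mirrors the Lexemes → Tokens step in the pipeline above.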

Symbol Table

There is only one symbol table, which does not store duplicate entries and uses simple indexing instead of hashing for performance.

The token stream feeds into the symbol table.
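A minimal sketch of a single symbol table that rejects duplicates, assuming "simple indexing" means each entry is stored in a list and referred to by its position, so looking a name up is a linear scan rather than a hash. The `Symbol` fields and the `SymbolTable` class are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Symbol:
    name: str
    kind: str                        # e.g. "IDENTIFIER"
    data_type: Optional[str] = None  # left empty until a later stage fills it in

class SymbolTable:
    def __init__(self) -> None:
        self.entries: List[Symbol] = []

    def index_of(self, name: str) -> Optional[int]:
        """Linear scan: return the position of name, or None if absent."""
        for i, entry in enumerate(self.entries):
            if entry.name == name:
                return i
        return None

    def add(self, name: str, kind: str) -> int:
        """Insert name unless it already exists; either way return its index."""
        existing = self.index_of(name)
        if existing is not None:
            return existing  # no duplicate entries are stored
        self.entries.append(Symbol(name, kind))
        return len(self.entries) - 1

    def __getitem__(self, index: int) -> Symbol:
        return self.entries[index]  # direct access by position, no hashing

# Feed a (hypothetical) token stream into the table.
table = SymbolTable()
stream = [("IDENTIFIER", "total"), ("IDENTIFIER", "count"), ("IDENTIFIER", "total")]
indices = [table.add(value, kind) for kind, value in stream]
print(indices)  # [0, 1, 0]
```

Later stages can then refer to a symbol by its small integer index instead of repeating the name.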

Syntax Analysis

Syntax Diagram → Abstract Syntax Tree → update Symbol Table (fill in missing data)

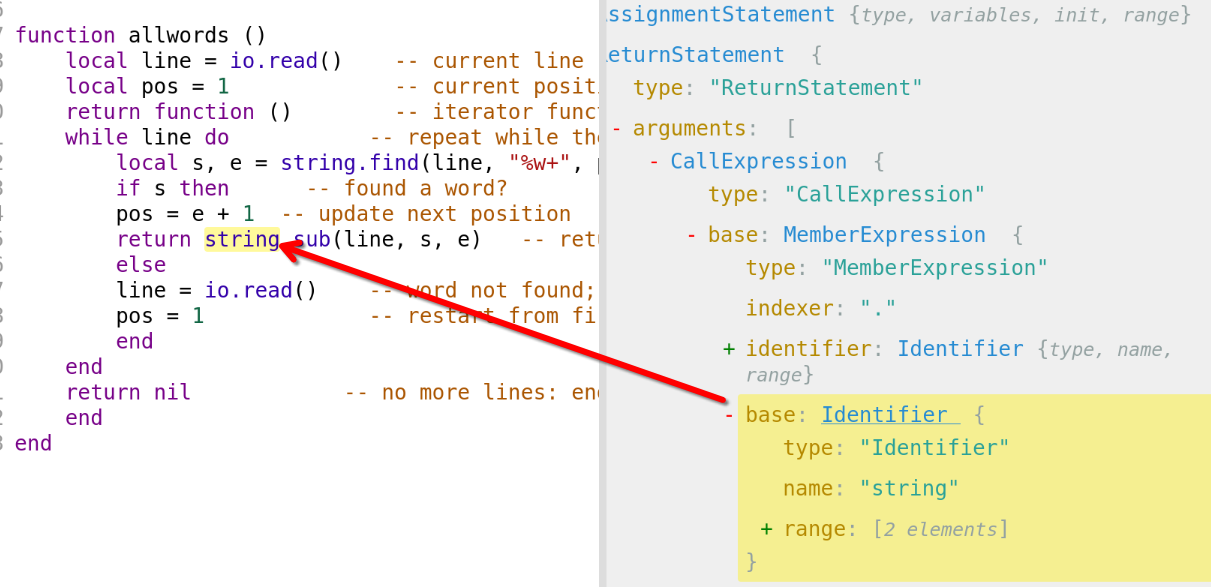
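A minimal sketch of the "update Symbol Table" step, assuming the missing data is each identifier's data type: after lexical analysis the table knows the names but not their types, and when the syntax analyser recognises a statement of the form `IDENTIFIER = literal` it records the literal's type. The token shapes, `LITERAL_TYPES` and `fill_in_types` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Token = Tuple[str, str]  # (token type, value)

# Hypothetical mapping from literal token types to data types.
LITERAL_TYPES = {"INTEGER": "int", "STRING": "str"}

def fill_in_types(statements: List[List[Token]], table: List[Dict[str, Optional[str]]]) -> None:
    """Recognise statements of the form IDENTIFIER '=' literal and record the
    literal's data type against that identifier's existing symbol table entry."""
    for stmt in statements:
        if (len(stmt) == 3 and stmt[0][0] == "IDENTIFIER"
                and stmt[1] == ("SYMBOL", "=") and stmt[2][0] in LITERAL_TYPES):
            for entry in table:  # linear scan over the index-based table
                if entry["name"] == stmt[0][1]:
                    entry["type"] = LITERAL_TYPES[stmt[2][0]]

# After lexical analysis the names are known but their types are missing.
table = [{"name": "count", "type": None}, {"name": "label", "type": None}]
fill_in_types([[("IDENTIFIER", "count"), ("SYMBOL", "="), ("INTEGER", "7")],
               [("IDENTIFIER", "label"), ("SYMBOL", "="), ("STRING", "hi")]], table)
print(table)
```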

Abstract Syntax Tree (AST)

A tree, generated from the tokens, that abstractly represents the syntactic structure of the program, following an outlined set of possible routes and values.
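A minimal sketch of generating an AST from tokens, using recursive descent (one common parsing technique, not necessarily the one the specification has in mind) over a hypothetical arithmetic-only grammar: `expression := term (('+'|'-') term)*`, `term := factor (('*'|'/') factor)*`, `factor := INTEGER | IDENTIFIER | '(' expression ')'`. Each grammar rule is one method, and each method returns a subtree.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Token = Tuple[str, str]  # (token type, value), e.g. ("INTEGER", "7")

@dataclass
class Num:
    value: int

@dataclass
class Var:
    name: str

@dataclass
class BinOp:
    op: str
    left: "Node"
    right: "Node"

Node = Union[Num, Var, BinOp]

class Parser:
    def __init__(self, tokens: List[Token]) -> None:
        self.tokens = tokens
        self.pos = 0

    def peek(self) -> Token:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else ("EOF", "")

    def advance(self) -> Token:
        token = self.peek()
        self.pos += 1
        return token

    def parse(self) -> Node:
        node = self.expression()
        if self.peek()[0] != "EOF":  # every token must belong to the tree
            raise SyntaxError(f"unexpected token {self.peek()[1]!r}")
        return node

    def expression(self) -> Node:
        node = self.term()
        while self.peek()[1] in ("+", "-"):
            op = self.advance()[1]
            node = BinOp(op, node, self.term())  # builds left-associative chains
        return node

    def term(self) -> Node:
        node = self.factor()
        while self.peek()[1] in ("*", "/"):
            op = self.advance()[1]
            node = BinOp(op, node, self.factor())
        return node

    def factor(self) -> Node:
        kind, value = self.advance()
        if kind == "INTEGER":
            return Num(int(value))
        if kind == "IDENTIFIER":
            return Var(value)
        if value == "(":
            node = self.expression()
            if self.advance()[1] != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {value!r}")

tokens = [("IDENTIFIER", "count"), ("SYMBOL", "*"), ("SYMBOL", "("),
          ("INTEGER", "2"), ("SYMBOL", "+"), ("INTEGER", "3"), ("SYMBOL", ")")]
print(Parser(tokens).parse())
```

Operator precedence comes from the grammar itself: `*` binds tighter because `term` sits below `expression`.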

Code Generation and Optimisation

During code generation, the abstract syntax tree built from the tokens is transformed into object code. The code may be optimised before generation, during it, or both, depending on the compiler.
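A minimal sketch of code generation, assuming a hypothetical stack machine as the "object code" target (a real compiler emits instructions for a specific processor). It walks an AST, encoded here as nested tuples so the block stands alone, in post-order and emits one instruction per node; the small `run` interpreter exists only to check that the generated code has the same effect as the expression. All instruction names are made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical AST encoding: ("num", 2), ("var", "x"), ("binop", "+", left, right)
Node = tuple
Instr = Tuple[str, ...]

OPCODES = {"+": "ADD", "-": "SUB", "*": "MUL", "/": "DIV"}

def generate(node: Node, code: List[Instr]) -> List[Instr]:
    """Post-order walk: emit code for both operands, then the operator."""
    kind = node[0]
    if kind == "num":
        code.append(("PUSH", str(node[1])))
    elif kind == "var":
        code.append(("LOAD", node[1]))
    elif kind == "binop":
        _, op, left, right = node
        generate(left, code)
        generate(right, code)
        code.append((OPCODES[op],))
    else:
        raise ValueError(f"unknown node {kind!r}")
    return code

def run(code: List[Instr], variables: Dict[str, int]) -> int:
    """Tiny interpreter for the hypothetical instruction set."""
    stack: List[int] = []
    for instr in code:
        opcode = instr[0]
        if opcode == "PUSH":
            stack.append(int(instr[1]))
        elif opcode == "LOAD":
            stack.append(variables[instr[1]])
        else:
            right = stack.pop()  # operands come off the stack in reverse order
            left = stack.pop()
            if opcode == "ADD":
                stack.append(left + right)
            elif opcode == "SUB":
                stack.append(left - right)
            elif opcode == "MUL":
                stack.append(left * right)
            else:  # "DIV": integer division in this toy machine
                stack.append(left // right)
    return stack.pop()

tree = ("binop", "*", ("var", "mult"), ("binop", "+", ("num", 2), ("num", 3)))
program = generate(tree, [])
print(program)
print(run(program, {"mult": 4}))  # 20
```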

Optimisation Example

Rules:

- removal of redundant operations whilst producing object code that achieves the same effect as the source program

- removal of unused subroutines

- removal of unused variables and constants

```python
my_old_num = 6  # unused var

def multiply(a, b):  # unused subroutine
    return a * b

my_num = 7
mult = int(input("Multiplier: "))
my_num = 7  # redundant operation
print(mult * my_num)
```

would then become (representation):

```python
# removed unused var
# removed unused subroutine
my_num = 7
mult = int(input("Multiplier: "))
# removed redundant operation
print(mult * my_num)
```
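A sketch of how these rules could be automated for the Python example above, using the standard-library `ast` module (`ast.unparse` needs Python 3.9+). Assumptions: "unused" means a top-level name that is defined but never read anywhere; an unused variable is only removed when its value is a constant, so no side effects are lost; "redundant" means re-assigning a constant the variable already holds. It also assumes calls inside assignments do not modify other variables, and it ignores scopes and control flow. Real optimisers rely on proper data-flow analysis.

```python
import ast

# The unoptimised example from above, as source text (parsed, never executed).
SOURCE = '''
my_old_num = 6
def multiply(a, b):
    return a * b
my_num = 7
mult = int(input("Multiplier: "))
my_num = 7
print(mult * my_num)
'''

def optimise(source: str) -> str:
    tree = ast.parse(source)
    # Every name that is read (Load context) anywhere in the program.
    used = {node.id for node in ast.walk(tree)
            if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Load)}
    known = {}  # variable name -> (type, value) of the constant it currently holds
    kept = []
    for stmt in tree.body:
        # Rule: remove unused subroutines (never called or referenced).
        if isinstance(stmt, ast.FunctionDef) and stmt.name not in used:
            continue
        if (isinstance(stmt, ast.Assign) and len(stmt.targets) == 1
                and isinstance(stmt.targets[0], ast.Name)):
            name = stmt.targets[0].id
            if isinstance(stmt.value, ast.Constant):
                value = (type(stmt.value.value), stmt.value.value)
                # Rule: remove unused variables/constants (constant value, no side effects).
                if name not in used:
                    continue
                # Rule: remove a redundant re-assignment of the value already held.
                if known.get(name) == value:
                    continue
                known[name] = value
            else:
                known.pop(name, None)  # value unknown after a non-constant assignment
        else:
            known.clear()  # be conservative after any other kind of statement
        kept.append(stmt)
    tree.body = kept
    return ast.unparse(tree)

print(optimise(SOURCE))
```

This is a single pass: removing one definition can make another name unused, which a real optimiser would catch by repeating the analysis until nothing changes.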